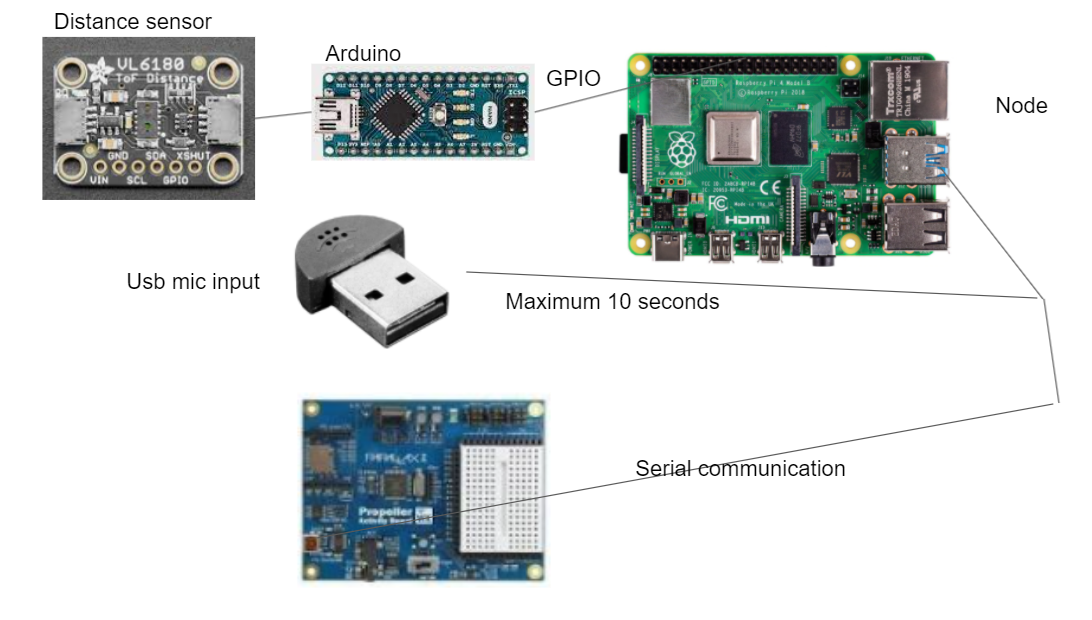

whole system

The system uses an Arduino, a Raspberry Pi, and a Propeller. The Arduino reads the distance sensor, the Propeller controls the motor, and the Raspberry Pi runs the tone recognition and coordinates everything else.
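To make the division of labour concrete, here is a minimal sketch of one interaction cycle as the Raspberry Pi might run it. The stage names (`waitForVisitor`, `record`, `convert`, `recognize`, `extractTones`, `playTones`) are hypothetical placeholders, not functions from the project's repository; each one stands in for a step described later in this post.

```javascript
// Hypothetical sketch of the Raspberry Pi's control loop. Each stage is an
// async function supplied by the caller; none of these names come from the
// project's code.
async function runCycle(stages) {
  // 1. Wait until the Arduino reports someone near the microphone.
  await stages.waitForVisitor();
  // 2. Record audio, then convert it to a format the recognizer accepts.
  const rawFile = await stages.record();
  const flacFile = await stages.convert(rawFile);
  // 3. Recognize the speech and extract one tone number per syllable.
  const transcript = await stages.recognize(flacFile);
  const tones = await stages.extractTones(transcript);
  // 4. Hand the tones to the Propeller so the motor can "sing" them.
  await stages.playTones(tones);
  return tones;
}

module.exports = { runCycle };
```

Passing the stages in as functions keeps the sequencing logic separate from the hardware and network code, so each stage can be swapped or stubbed out while testing.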

Code Source

All the code is available on GitHub: here is the code source.

Propeller

source code

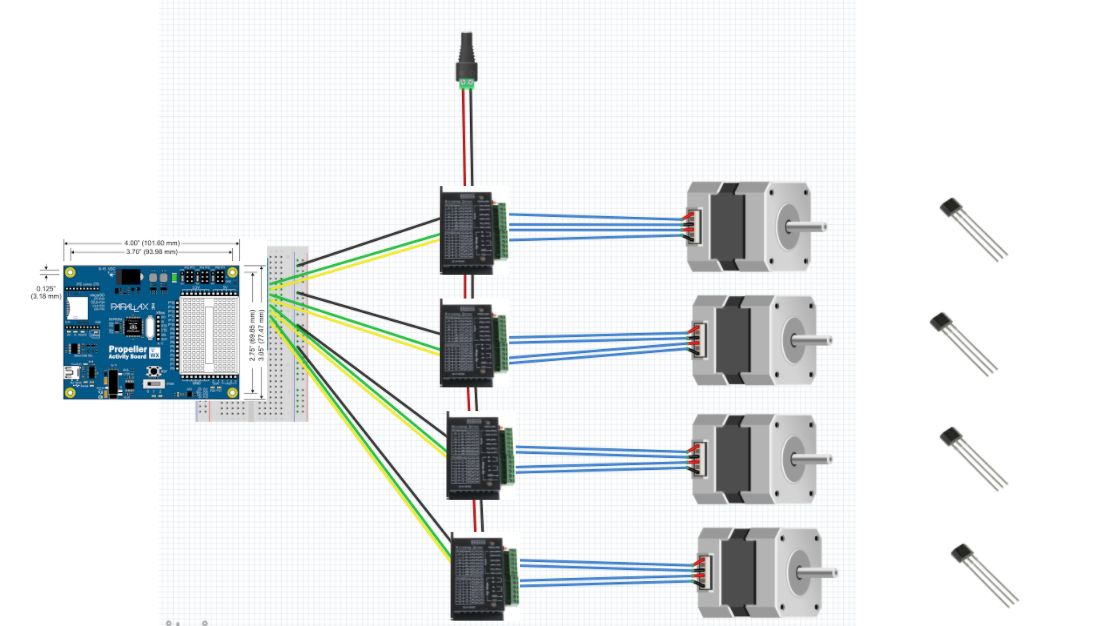

I am using a TB6600 stepper driver and a 42 mm (NEMA 17 size) stepper motor with a torque of 0.42 N·m. I mostly followed this tutorial: tutorial
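The TB6600 moves the motor one (micro)step per pulse on its STEP input, so motor speed comes down to pulse rate. The sketch below shows that arithmetic. It assumes a 1.8° motor (200 full steps per revolution), which is typical for 42 mm steppers but should be checked against the motor's datasheet, and a microstep setting chosen on the driver's DIP switches.

```javascript
// Sketch: STEP pulses per second needed for a given speed on a TB6600.
// Assumes a 1.8-degree motor (200 full steps per revolution).
const FULL_STEPS_PER_REV = 200;
// Microstep divisions commonly offered by TB6600 modules.
const VALID_MICROSTEPS = [1, 2, 4, 8, 16, 32];

function stepsPerSecond(rpm, microsteps) {
  if (!VALID_MICROSTEPS.includes(microsteps)) {
    throw new RangeError(`unsupported microstep setting: ${microsteps}`);
  }
  return (rpm / 60) * FULL_STEPS_PER_REV * microsteps;
}

// Time between rising edges on the STEP pin, in microseconds.
function stepIntervalMicros(rpm, microsteps) {
  return 1e6 / stepsPerSecond(rpm, microsteps);
}
```

For example, 60 RPM at full-step resolution is 200 pulses per second, one every 5000 µs; raising the microstep setting to 16 at the same speed shortens the interval to 312.5 µs, which is why finer microstepping demands tighter timing from the controller.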

This is the diagram for the Propeller side.

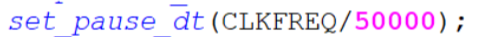

I also made the time step (delta time) more finely divided in the Propeller code.

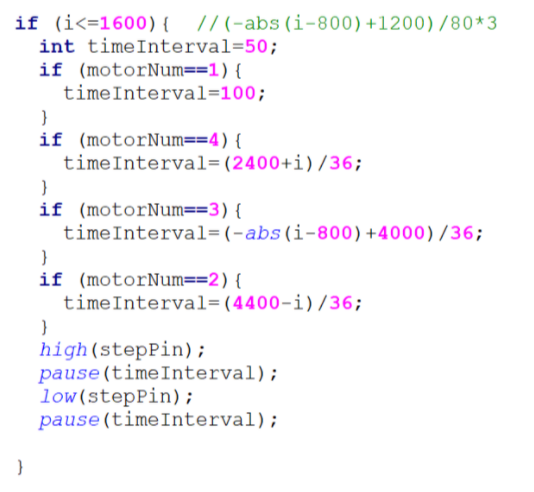

I also programmed the stepper motor's sound to correspond to the tones of the language.
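A stepper motor whines at its step frequency, so changing the step rate over time changes its pitch. One way to mimic a tone, sketched here as an assumption rather than the project's actual method, is to describe each Mandarin tone with Chao tone numbers (1 = lowest pitch, 5 = highest) and interpolate a frequency contour from them. The pitch-to-frequency mapping (`LOW_HZ`, `HZ_PER_LEVEL`) is a hypothetical choice.

```javascript
// Sketch: Mandarin tone number -> sequence of step frequencies (Hz).
// Contours are Chao tone numbers; the Hz mapping below is hypothetical.
const CONTOURS = {
  1: [5, 5],    // tone 1: high level (55)
  2: [3, 5],    // tone 2: rising (35)
  3: [2, 1, 4], // tone 3: dipping (214)
  4: [5, 1],    // tone 4: falling (51)
};
const LOW_HZ = 200;       // frequency for pitch level 1 (hypothetical)
const HZ_PER_LEVEL = 100; // added per pitch level (hypothetical)

function toneFrequencies(tone, samples) {
  const levels = CONTOURS[tone];
  if (!levels) throw new RangeError(`unknown tone: ${tone}`);
  const out = [];
  for (let i = 0; i < samples; i++) {
    // Position along the contour: 0 at the start, levels.length - 1 at the end.
    const t = samples === 1 ? 0 : i / (samples - 1);
    const pos = t * (levels.length - 1);
    // Segment index, clamped so the final sample uses the last segment.
    const k = Math.min(Math.floor(pos), levels.length - 2);
    // Linear interpolation between the two Chao points of this segment.
    const level = levels[k] + (levels[k + 1] - levels[k]) * (pos - k);
    out.push(LOW_HZ + HZ_PER_LEVEL * (level - 1));
  }
  return out;
}
```

Each frequency `f` becomes a step interval of `1e6 / f` microseconds; more samples per tone give a smoother glide, at the cost of updating the step timing more often.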

I also added a Hall-effect sensor to detect the motor's movement, so that the stepper motor stops at the correct position every time.
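The idea behind the Hall sensor is a homing routine: keep stepping until the sensor detects the magnet, then stop. The sketch below shows that logic with the hardware abstracted away; `readHall` and `stepOnce` are hypothetical callbacks standing in for the real Propeller pin reads and pulses, and the step limit is an added safeguard.

```javascript
// Sketch of a homing routine. readHall() returns true when the magnet is
// over the sensor; stepOnce() advances the motor by one step. Both are
// hypothetical stand-ins for the real I/O.
function homeMotor(readHall, stepOnce, maxSteps) {
  for (let steps = 0; steps < maxSteps; steps++) {
    if (readHall()) return steps; // at the home position: report steps taken
    stepOnce();
  }
  // Guard against a missing magnet or a disconnected sensor.
  throw new Error(`home position not found within ${maxSteps} steps`);
}
```

The step limit matters because a failed sensor would otherwise leave the motor spinning forever.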

Arduino

source code

The Arduino side is really simple: I use a VL6180 distance sensor to detect whether anyone is approaching the microphone.
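On the Raspberry Pi side, the Arduino's readings still have to be turned into a yes/no "someone is here" signal. Here is a minimal sketch, assuming the Arduino prints one distance reading in millimetres per line over serial. The 100 mm threshold and the three-consecutive-readings rule are hypothetical values meant to filter out single noisy readings.

```javascript
// Sketch: decide whether someone is approaching, from serial lines that
// each hold one distance reading in mm (assumed Arduino output format).
function createApproachDetector(thresholdMm = 100, needed = 3) {
  let closeCount = 0; // consecutive readings below the threshold
  return function onLine(line) {
    const mm = Number.parseInt(line.trim(), 10);
    // A garbled line neither confirms nor cancels an approach.
    if (Number.isNaN(mm)) return closeCount >= needed;
    closeCount = mm < thresholdMm ? closeCount + 1 : 0;
    return closeCount >= needed;
  };
}
```

Feed each line from a line-based serial reader into `onLine`, and start a recording when it first returns `true`.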

I could not do this on the Raspberry Pi because the sensor's library does not have a Node version.

Raspberry Pi

source code

I am using Node.js on the Raspberry Pi; here are the Node packages I used for this project.

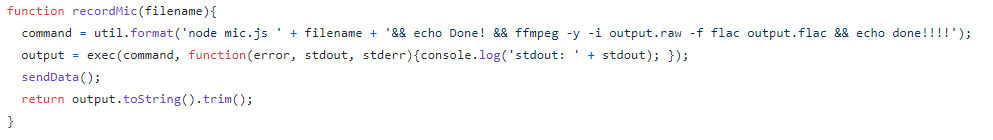

My biggest struggle was the microphone input, because it was hard to stop the stream and start another recording. Pedro helped me by figuring out how to run one Node script from inside another.

Running “node mic.js” as a child process command executes the “mic.js” file, which records the microphone input.
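Here is a minimal sketch of that approach using Node's built-in `child_process.execFile`. It is not the project's exact code: `runNodeScript` is a hypothetical helper, and passing `['mic.js']` assumes the recording script sits in the working directory. Because every recording runs in its own process, the microphone stream is released when that child exits, which sidesteps having to stop and restart a stream inside the main program.

```javascript
const { execFile } = require('child_process');

// Sketch: run another Node script as a child process and wait for it.
// process.execPath is the Node binary running this parent script.
function runNodeScript(args) {
  return new Promise((resolve, reject) => {
    execFile(process.execPath, args, (error, stdout, stderr) => {
      if (error) {
        // Non-zero exit or spawn failure: surface the child's stderr if any.
        reject(new Error(`child failed: ${stderr || error.message}`));
      } else {
        resolve(stdout);
      }
    });
  });
}

// Usage (hypothetical): await runNodeScript(['mic.js']);
```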

Then ffmpeg converts the recording from .raw to .flac so that Watson can recognize it.
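A .raw file is headerless PCM, so ffmpeg has to be told the sample format, sample rate, and channel count before it can read the input. The sketch below builds that command line. The defaults (signed 16-bit little-endian, 16 kHz, mono) are assumptions and must match whatever mic.js actually records; `ffmpegRawToFlacArgs` and `rawToFlac` are hypothetical helper names.

```javascript
const { execFile } = require('child_process');

// Sketch: ffmpeg arguments for headerless PCM (.raw) -> FLAC.
// Options placed before -i describe the input; the defaults are assumptions.
function ffmpegRawToFlacArgs(input, output, { rate = 16000, channels = 1 } = {}) {
  return [
    '-y',                    // overwrite the output file if it exists
    '-f', 's16le',           // input: signed 16-bit little-endian PCM
    '-ar', String(rate),     // input sample rate in Hz
    '-ac', String(channels), // input channel count
    '-i', input,
    output,                  // the .flac extension selects the FLAC encoder
  ];
}

// Runs ffmpeg (must be installed and on PATH) and resolves with the output path.
function rawToFlac(input, output, opts) {
  return new Promise((resolve, reject) => {
    execFile('ffmpeg', ffmpegRawToFlacArgs(input, output, opts), (error) =>
      (error ? reject(error) : resolve(output)));
  });
}
```

If the rate or channel count does not match the recording, ffmpeg still produces a file, but it plays at the wrong speed or sounds garbled, which would hurt recognition.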

Then the Watson API performs speech recognition, and the pinyin Node package gets the tone values from the transcript.
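For the last step, once the transcript has been converted to tone-marked pinyin, the tone number can be read from the diacritic on each syllable. The sketch below does this without calling the pinyin package, assuming the syllables already carry tone marks (e.g. "zhōng"). Unicode NFD normalization splits each marked vowel into a base letter plus a combining mark, and the mark identifies the tone; `toneOf` and `tonesOf` are hypothetical helper names.

```javascript
// Sketch: tone number (1-4, or 0 for the neutral tone) from tone-marked pinyin.
const TONE_MARKS = new Map([
  ['\u0304', 1], // combining macron, as in ā
  ['\u0301', 2], // combining acute accent, as in á
  ['\u030C', 3], // combining caron, as in ǎ
  ['\u0300', 4], // combining grave accent, as in à
]);

function toneOf(syllable) {
  // NFD turns e.g. "ō" into "o" followed by U+0304.
  for (const ch of syllable.normalize('NFD')) {
    if (TONE_MARKS.has(ch)) return TONE_MARKS.get(ch);
  }
  return 0; // no tone mark: neutral tone
}

function tonesOf(syllables) {
  return syllables.map(toneOf);
}
```

Because ü keeps its diaeresis as a separate combining mark after normalization, syllables like "lǜ" still resolve to the right tone.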